Welcome to the Future of Play: Where Arduino Meets Imagination

Remember those lazy afternoons with toys that just sat there, waiting to be played with? Well, those days are officially over. Imagine a toy that not only responds but interacts—reacting to touch, sound, or even the tiniest movement. Thanks to Arduino and a handful of clever sensors, the realm of interactive electronic toys is no longer the stuff of sci-fi dreams but a DIY wonderland accessible to hobbyists and parents alike.

Why Arduino? Because Simplicity Meets Power

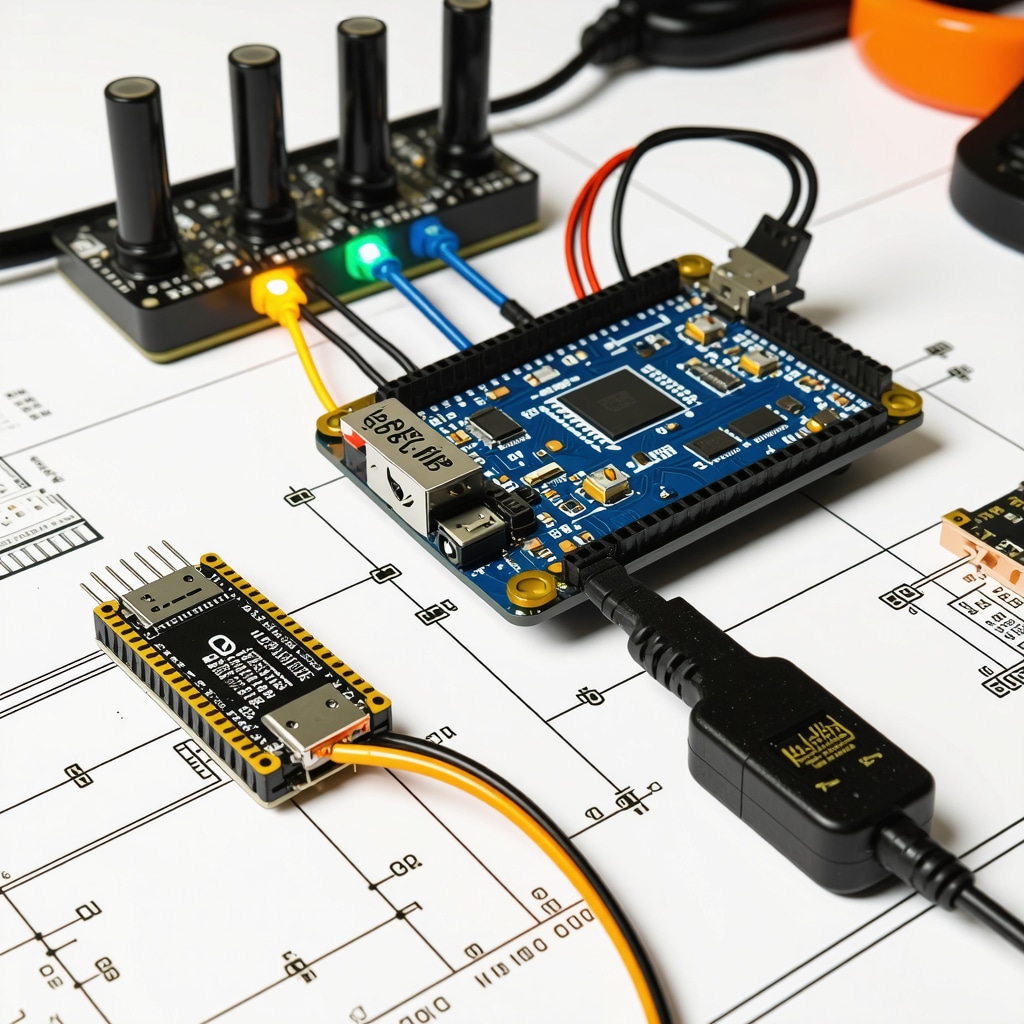

Arduino boards are like the Swiss Army knives of the electronics world—compact, affordable, and remarkably versatile. Whether you’re crafting a sensor-driven robot or a musical toy that changes tune with proximity, Arduino is the heart that brings your creation to life. Pair it with sensors like ultrasonic distance detectors, touch sensors, or accelerometers, and suddenly, your toy isn’t just a toy—it’s an experience.

Is It Really That Easy to Build Interactive Toys at Home?

That’s the million-dollar question. The short answer: yes, with a pinch of patience and a dash of curiosity. The beauty of using Arduino lies in its vast community and treasure trove of tutorials that guide you from blinking LEDs to complex sensor integration. For instance, a simple project could involve a toy car that stops when it senses an obstacle, teaching kids about cause and effect in a fun, hands-on way.
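A toy car that stops for obstacles mostly comes down to one calculation: turning an ultrasonic echo time into a distance and comparing it with a threshold. The snippet below is plain C++ that you can test on a computer, not a complete Arduino sketch. It assumes an HC-SR04-style sensor that reports the round-trip echo time in microseconds, and a speed of sound of about 343 m/s. The function names and the 20 cm threshold are hypothetical.

```cpp
#include <cstdint>

// Speed of sound at roughly 20 °C: about 343 m/s, i.e. 0.0343 cm per microsecond.
constexpr float kSoundCmPerUs = 0.0343f;

// Hypothetical stop threshold; tune it for your car's speed and braking distance.
constexpr float kStopDistanceCm = 20.0f;

// The echo time covers the trip to the obstacle and back, so halve it.
float echoToDistanceCm(std::uint32_t echoMicros) {
  return echoMicros * kSoundCmPerUs / 2.0f;
}

// Returns true when the car should stop. An echo time of 0 is treated as
// "no echo received", so the car keeps driving.
bool shouldStop(std::uint32_t echoMicros) {
  if (echoMicros == 0) {
    return false;
  }
  return echoToDistanceCm(echoMicros) < kStopDistanceCm;
}
```

On the board, `echoMicros` would typically come from pulsing the trigger pin HIGH for about 10 µs and then calling `pulseIn(echoPin, HIGH, 30000)`. That call returns 0 if no echo arrives within the 30 ms timeout. The motor code then checks `shouldStop()` on every pass through the loop.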

Sensor Spotlight: The Magical Eyes and Ears of Your Toy

Think of sensors as your toy’s senses. Ultrasonic sensors measure distance much like a bat’s echolocation. Light sensors let toys react to changes in brightness, and sound sensors can trigger actions when someone claps or speaks. Integrating these sensors with Arduino turns a static toy into a dynamic one, adding layers of interactivity that captivate and educate at the same time.

From Concept to Creation: The Joy of DIY

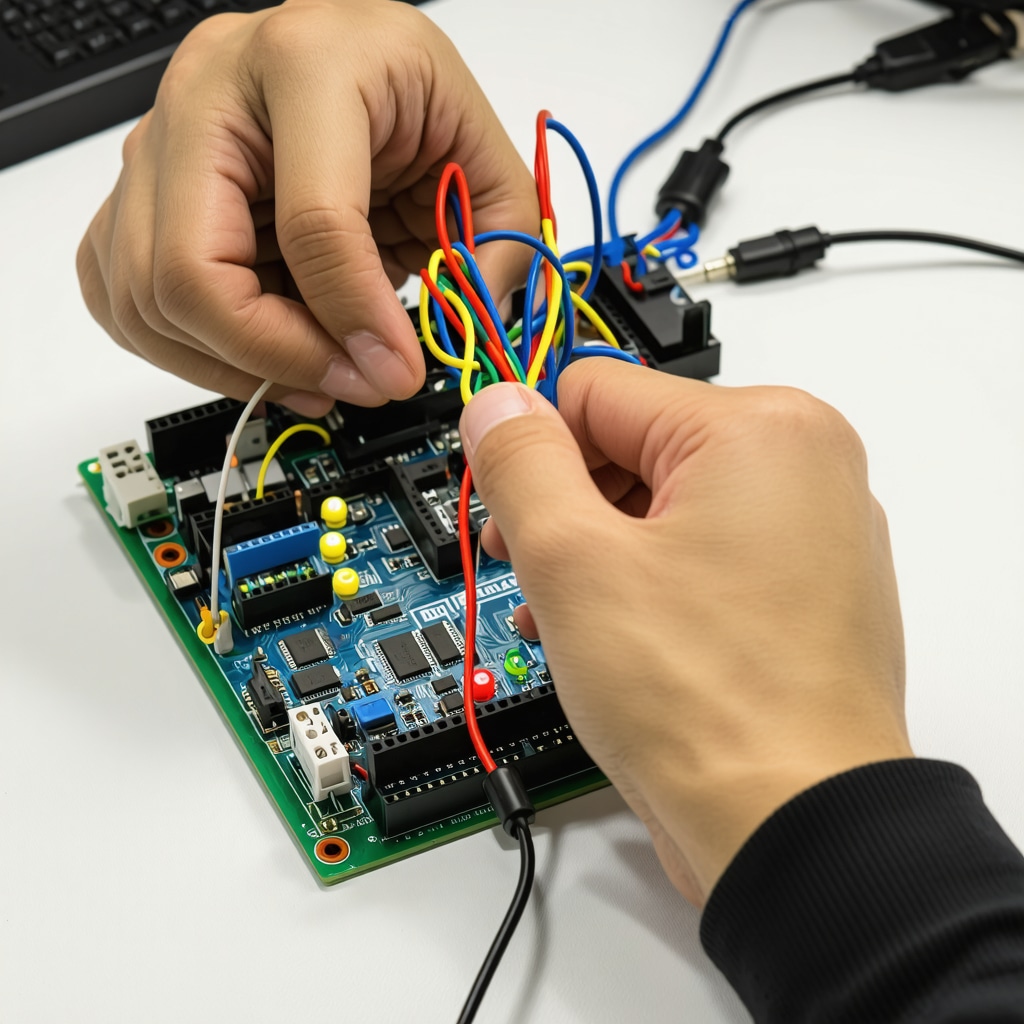

Building interactive toys isn’t just about electronics; it’s about storytelling and creativity. Combining your tech skills with DIY crafting—think custom paint, quirky cases, or even natural fiber decorations—elevates your project. If you’re hungry for creative inspiration beyond toys, you might enjoy exploring unique DIY crafts that transform everyday spaces.

Ready to Dive In? Share Your Interactive Toy Ideas!

Got a spark of invention or a quirky concept for an Arduino-powered toy? Dive into the conversation and let’s build a community of makers who believe playtime can be as educational as it is fun. Your feedback and stories might inspire the next big interactive toy sensation!

For a deep dive into sensor integration and Arduino basics, the official Arduino tutorials are an authoritative resource that can kickstart your journey with confidence.

Expanding Horizons: Advanced Sensor Integration for Next-Level Toys

Once you master the basics of Arduino and sensor use in toys, the real fun begins by pushing the boundaries of interactivity. Imagine incorporating multi-sensor fusion—combining inputs from ultrasonic sensors, accelerometers, and even temperature sensors to create toys that respond to their environment with nuanced behaviors. For example, a robotic pet toy could sense not just proximity but also changes in ambient temperature and motion, adjusting its “mood” or actions accordingly. This complexity enhances user engagement and introduces sophisticated STEM concepts in an intuitive, playful manner.
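The snippet below makes the robotic pet idea concrete with a minimal rule-based sketch that combines three readings into one "mood". It is deliberately simpler than the filter-based fusion discussed later. The mood names, the `SensorSnapshot` struct and every threshold are hypothetical placeholders, so tune them for your own hardware.

```cpp
// Hypothetical moods for a robotic pet; names and thresholds are illustrative
// assumptions, not values from any particular kit.
enum class Mood { Sleepy, Curious, Playful, Chilly };

struct SensorSnapshot {
  float distanceCm;    // from an ultrasonic sensor
  float temperatureC;  // from a temperature sensor
  float motion;        // e.g. deviation of accelerometer magnitude, in g
};

// Combine several readings into one behaviour. The checks are ordered by
// priority: an uncomfortable environment overrides social cues.
Mood chooseMood(const SensorSnapshot& s) {
  const bool someoneNear = s.distanceCm < 30.0f;
  const bool beingShaken = s.motion > 0.5f;

  if (s.temperatureC < 15.0f) return Mood::Chilly;
  if (someoneNear && beingShaken) return Mood::Playful;
  if (someoneNear) return Mood::Curious;
  return Mood::Sleepy;
}
```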

How Can We Leverage Machine Learning with Arduino to Create Smarter Toys?

Integrating machine learning (ML) models with Arduino platforms is increasingly feasible. This opens the door to toys that learn from interactions rather than following fixed programming. With lightweight ML models, you can build toys that adapt to a child’s play style or preferences over time. For instance, gesture recognition from accelerometer data, or simple voice-command recognition from microphone audio, can create an evolving play experience. Tools such as TensorFlow Lite for Microcontrollers make it possible to run these models on supported Arduino-compatible boards, letting makers innovate at the intersection of AI and DIY electronics.

For detailed guidance on implementing ML on microcontrollers, the TensorFlow Lite for Microcontrollers official site offers authoritative tutorials and case studies that elevate your DIY projects to professional-grade smart devices.

Designing with Purpose: Balancing Creativity and Practicality in DIY Toys

Advanced projects benefit from thoughtful design that prioritizes user safety, durability, and ease of maintenance. Selecting materials that complement your electronics, such as sustainable woods, non-toxic paints, or natural fibers, ensures your creations are not only impressive but safe for children. Moreover, modular design approaches—where components like sensors or batteries are easily replaceable—extend the lifespan of your DIY toys and enhance their educational value by allowing iterative improvements based on feedback.

If you’re inspired to explore creative yet functional DIY projects beyond toys, discover unique DIY crafts that transform everyday spaces—a perfect complement to your tech-savvy creations.

Have You Experimented with Combining Traditional Crafting and Arduino Technology in Your Projects?

We invite you to share your experiences blending handcrafting techniques with Arduino-powered electronics. How do you balance aesthetics with functionality? Your insights could inspire others to push the boundaries of DIY innovation.

Mastering Multi-Sensor Fusion: Crafting Toys with Context-Aware Intelligence

To truly elevate the interactive toy experience beyond basic sensor responses, embracing multi-sensor fusion is paramount. This technique involves synthesizing data from diverse sensors—such as ultrasonic distance modules, gyroscopes, temperature sensors, and microphones—enabling the toy to interpret and react to its environment with remarkable context sensitivity. For instance, a robotic companion could modulate its behavior when it detects a child approaching (via proximity sensors), changes in ambient light (using photodiodes), and even the tone of the child’s voice (through audio analysis), simulating emotional responsiveness.

Implementing sensor fusion requires thoughtful data processing strategies, often involving filtering algorithms like Kalman or complementary filters to merge noisy sensor inputs into coherent signals. These approaches not only improve reliability but also enable the toy to make decisions based on patterns rather than isolated triggers. The result is a more fluid, lifelike interaction that captivates users and subtly introduces them to complex concepts in signal processing and embedded systems.
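Of the two filters named above, the complementary filter is the easier one to start with. The sketch below fuses a gyroscope and an accelerometer into a single tilt angle. The integrated gyro rate is smooth but drifts over time. The accelerometer angle is noisy but does not drift. A weighted blend of the two keeps the strengths of each. The code assumes gyro rates in degrees per second and accelerometer readings in g. The class name and the default weight of 0.98 are illustrative choices, not tuned values.

```cpp
#include <cmath>

// Minimal complementary filter for one tilt axis (illustrative sketch).
class ComplementaryFilter {
 public:
  explicit ComplementaryFilter(float alpha = 0.98f) : alpha_(alpha) {}

  // ay, az: accelerometer components in g; gyroRateDps: rotation rate about
  // the same axis in degrees/second; dtSeconds: time since the last update.
  float update(float ay, float az, float gyroRateDps, float dtSeconds) {
    constexpr float kRadToDeg = 57.29578f;
    // Absolute but noisy angle, derived from the direction of gravity.
    const float accelAngle = std::atan2(ay, az) * kRadToDeg;
    // Smooth but drifting angle, from integrating the gyro rate.
    const float gyroAngle = angleDeg_ + gyroRateDps * dtSeconds;
    // Trust the gyro over short time scales, the accelerometer over long ones.
    angleDeg_ = alpha_ * gyroAngle + (1.0f - alpha_) * accelAngle;
    return angleDeg_;
  }

  float angle() const { return angleDeg_; }

 private:
  float alpha_;
  float angleDeg_ = 0.0f;
};
```

Call `update()` at a steady rate and pass it the measured time step. A larger alpha trusts the gyro more and smooths more heavily, but it corrects drift more slowly. A Kalman filter replaces the fixed weight with one computed from noise estimates, at the cost of more math per update.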

What Are the Best Practices for Synchronizing Multiple Sensor Inputs on Resource-Constrained Arduino Boards?

Given Arduino’s limited memory and processing power, synchronizing sensor data efficiently is a nuanced challenge. A common strategy is to give each sensor an update rate that matches how quickly its signal actually changes. This balances responsiveness against computational load. Interrupt-driven data acquisition can improve real-time performance by ensuring that critical sensor events are handled promptly. Lightweight algorithms such as moving average filters or threshold-based event detection are efficient alternatives to complex machine learning models when resources are tight.
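The moving average filter and threshold-based event detection mentioned above take only a few lines each and use no dynamic memory. In this sketch the class names, the window size and the threshold are hypothetical. The trigger fires only on the sample where the smoothed signal first rises above the threshold, so a single clap or tap produces one event rather than a burst.

```cpp
#include <cstddef>

// Moving average over the last N samples. A running sum makes each update O(1).
template <std::size_t N>
class MovingAverage {
 public:
  float add(float sample) {
    sum_ -= window_[index_];  // drop the sample that falls out of the window
    window_[index_] = sample;
    sum_ += sample;
    index_ = (index_ + 1) % N;
    if (count_ < N) ++count_;  // average over fewer samples until the window fills
    return sum_ / static_cast<float>(count_);
  }

 private:
  float window_[N] = {};
  float sum_ = 0.0f;
  std::size_t index_ = 0;
  std::size_t count_ = 0;
};

// Threshold-based event detection: reports an event only on the rising edge,
// not on every sample that stays above the threshold.
class ThresholdTrigger {
 public:
  explicit ThresholdTrigger(float threshold) : threshold_(threshold) {}

  bool update(float value) {
    const bool above = value > threshold_;
    const bool fired = above && !wasAbove_;
    wasAbove_ = above;
    return fired;
  }

 private:
  float threshold_;
  bool wasAbove_ = false;
};
```

A typical loop would smooth each raw reading, for example `float level = avg.add(analogRead(A0));` with `MovingAverage<8> avg;`, and act only when `trigger.update(level)` returns true.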

Memory management is equally crucial. Statically allocated circular buffers hold recent sensor readings without dynamic allocation, which avoids heap fragmentation. Arduino’s PROGMEM keeps static data such as lookup tables in flash memory instead of scarce SRAM. Using low-power modes between sensor readings further reduces energy consumption, which is particularly important for battery-powered toys.
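A circular buffer is simple enough to write by hand. The template below is a hypothetical sketch. Its storage is a fixed array sized at compile time, so logging never allocates memory. Once the buffer is full, each new sample overwrites the oldest one.

```cpp
#include <cstddef>

// Fixed-size circular buffer. Storage is allocated once, at compile time,
// so pushing samples never touches the heap.
template <typename T, std::size_t Capacity>
class RingBuffer {
 public:
  void push(const T& value) {
    data_[head_] = value;  // overwrites the oldest sample once full
    head_ = (head_ + 1) % Capacity;
    if (size_ < Capacity) ++size_;
  }

  std::size_t size() const { return size_; }

  // i = 0 is the oldest stored sample, i = size() - 1 the newest.
  // Callers must ensure i < size().
  const T& at(std::size_t i) const {
    const std::size_t oldest = (head_ + Capacity - size_) % Capacity;
    return data_[(oldest + i) % Capacity];
  }

 private:
  T data_[Capacity] = {};
  std::size_t head_ = 0;  // next write position
  std::size_t size_ = 0;
};
```

For example, `RingBuffer<int16_t, 32> lastReadings;` keeps the 32 most recent readings for gesture or event analysis. Constant tables belong in flash instead. On AVR boards they can be declared with `PROGMEM` and read back with `pgm_read_byte()` or `pgm_read_word()` from `<avr/pgmspace.h>`.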

For an in-depth exploration of sensor data fusion techniques tailored to microcontrollers, consider reviewing the comprehensive guide by Simon D. Levy in “Practical Embedded Sensor Fusion on Arduino” (embedded.com), which provides hands-on examples and performance optimization tips.

Augmenting Play with Adaptive Machine Learning: Crafting Toys That Grow Smarter

The integration of machine learning (ML) on Arduino platforms heralds a transformative leap for DIY interactive toys. Moving beyond static responses, ML-powered toys can analyze play patterns, learn preferences, and evolve their behavior over time, offering personalized experiences that deepen engagement.

Lightweight models such as decision trees or small neural networks can be trained offline on user interaction data and then deployed to the board. Small neural networks can run through frameworks like TensorFlow Lite for Microcontrollers, while a compact decision tree can simply be exported as nested if/else code. For example, a toy robot could learn to recognize common gestures or voice commands unique to its owner and adapt its responses accordingly. This adaptive capability enriches play and also gives young learners an accessible introduction to AI concepts.

Implementing ML requires careful attention to model size and inference speed so the model fits within Arduino’s constraints. Techniques such as quantization and pruning reduce model complexity, often with only a small loss of accuracy. Developers should also design intuitive feedback mechanisms so users can understand and interact with the toy’s evolving behaviors.
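Quantization is easiest to understand with numbers. The sketch below shows affine int8 quantization, a scheme typically used for int8 microcontroller models. Each value is stored as one signed byte q and recovered as (q - zeroPoint) × scale, so each weight takes 1 byte instead of the 4 bytes of a 32-bit float. The struct and function names are hypothetical. In practice the model conversion tools choose the scale and zero point for you.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Affine quantization parameters (illustrative): real ≈ (q - zeroPoint) * scale.
struct QuantParams {
  float scale;             // size of one quantization step
  std::int32_t zeroPoint;  // integer that represents real 0.0
};

std::int8_t quantize(float x, QuantParams p) {
  const std::int32_t q =
      static_cast<std::int32_t>(std::lround(x / p.scale)) + p.zeroPoint;
  // Values outside the representable range saturate at the int8 limits.
  const std::int32_t clamped =
      std::max<std::int32_t>(-128, std::min<std::int32_t>(q, 127));
  return static_cast<std::int8_t>(clamped);
}

float dequantize(std::int8_t q, QuantParams p) {
  return static_cast<float>(static_cast<std::int32_t>(q) - p.zeroPoint) * p.scale;
}
```

With a scale of 0.05 and a zero point of 0, the value 1.0 is stored as 20 and recovered as 1.0. Anything outside roughly -6.4 to 6.35 saturates at the int8 limits. Rounding and clipping like this cause the small accuracy loss.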

How Can One Efficiently Implement Gesture Recognition Using Arduino and ML for Interactive Toys?

Gesture recognition typically involves processing accelerometer and gyroscope data to classify movements. By collecting labeled gesture datasets, developers can train classifiers like k-Nearest Neighbors (k-NN) or Support Vector Machines (SVM) offline. These models, once simplified, can run on Arduino to interpret real-time sensor inputs.
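The snippet below is a minimal k-NN classifier of the kind that can run directly on a board. It assumes the training examples were recorded offline and pasted into the sketch as a small constant table. The feature count (six, for example mean and variance on three axes), the gesture labels and all names are hypothetical. k-NN needs no training step on the device, but every classification compares the query against every stored example, so it only suits small datasets.

```cpp
#include <array>
#include <cstddef>
#include <limits>
#include <utility>

// Hypothetical feature layout: e.g. mean and variance for each of 3 axes.
constexpr std::size_t kFeatures = 6;
using Features = std::array<float, kFeatures>;

struct Example {
  Features features;
  int label;  // illustrative gesture IDs, e.g. 0 = shake, 1 = circle
};

float squaredDistance(const Features& a, const Features& b) {
  float d = 0.0f;
  for (std::size_t i = 0; i < kFeatures; ++i) {
    const float diff = a[i] - b[i];
    d += diff * diff;
  }
  return d;
}

// Classify a query by majority vote among its K nearest training examples.
// Labels are assumed to lie in [0, kMaxLabels).
template <std::size_t K, std::size_t N>
int classifyKnn(const std::array<Example, N>& train, const Features& query) {
  static_assert(K >= 1 && K <= N, "K must be between 1 and the training set size");
  constexpr int kMaxLabels = 8;

  // Sorted list of the K closest examples seen so far.
  std::array<float, K> bestDist;
  std::array<int, K> bestLabel;
  bestDist.fill(std::numeric_limits<float>::max());
  bestLabel.fill(-1);

  for (const Example& ex : train) {
    float d = squaredDistance(ex.features, query);
    int label = ex.label;
    for (std::size_t j = 0; j < K; ++j) {
      if (d < bestDist[j]) {
        std::swap(d, bestDist[j]);       // insert here and carry the displaced
        std::swap(label, bestLabel[j]);  // entry further down the list
      }
    }
  }

  int votes[kMaxLabels] = {};
  for (int label : bestLabel) {
    if (label >= 0 && label < kMaxLabels) ++votes[label];
  }
  int winner = 0;
  for (int c = 1; c < kMaxLabels; ++c) {
    if (votes[c] > votes[winner]) winner = c;  // ties go to the lower label
  }
  return winner;
}
```

With an odd K such as 3 and two gesture classes, votes cannot tie. With more classes, this version breaks ties in favor of the lowest label number.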

To optimize performance, preprocess sensor data before feeding it into the ML model. Normalization and feature extraction (mean, variance, FFT components) are essential steps. Platforms such as Edge Impulse and libraries such as TensorFlow Lite Micro simplify deployment and make it easier to retrain and redeploy updated models.
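The preprocessing step can be sketched for a single accelerometer axis as shown below. The code computes the window mean and population variance and min-max normalizes a value into [0, 1]. FFT features are omitted for brevity. The function names are hypothetical. The normalization ranges are assumed to come from the training data, so the device must use exactly the same ranges as the training pipeline.

```cpp
#include <cstddef>

struct AxisFeatures {
  float mean;
  float variance;
};

// Mean and population variance of one axis over a window of n samples.
AxisFeatures extractFeatures(const float* samples, std::size_t n) {
  AxisFeatures f{0.0f, 0.0f};
  if (n == 0) return f;
  float sum = 0.0f;
  for (std::size_t i = 0; i < n; ++i) sum += samples[i];
  f.mean = sum / static_cast<float>(n);
  float sq = 0.0f;
  for (std::size_t i = 0; i < n; ++i) {
    const float d = samples[i] - f.mean;
    sq += d * d;
  }
  f.variance = sq / static_cast<float>(n);
  return f;
}

// Min-max normalization into [0, 1], clamping values outside the
// (hypothetical) range measured on the training data.
float normalize(float value, float minValue, float maxValue) {
  if (maxValue <= minValue) return 0.0f;
  float t = (value - minValue) / (maxValue - minValue);
  if (t < 0.0f) t = 0.0f;
  if (t > 1.0f) t = 1.0f;
  return t;
}
```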

For practical tutorials and example projects on embedded ML for gesture recognition, visit the Edge Impulse platform, which offers community-contributed models and comprehensive guides.

Innovative Power Solutions: Sustaining Your Interactive Toy’s Lifelong Play

Power management is a critical yet often overlooked aspect of DIY interactive toys. Balancing performance with battery life demands innovative solutions. Consider integrating energy harvesting modules, such as small solar panels or piezoelectric elements, to supplement battery power during play. Coupling these with power-efficient components and sleep-mode programming can significantly extend operational time.

Modular battery compartments with hot-swappable lithium-polymer packs enhance user convenience and safety. Additionally, incorporating voltage regulation circuits ensures consistent sensor and microcontroller performance, preventing erratic behavior due to power fluctuations.

What Are Cutting-Edge Strategies for Energy-Efficient Arduino Toy Designs?

Advanced techniques include dynamic voltage scaling and duty cycling, where sensors and processors operate intermittently or at reduced voltages when full performance is unnecessary. Employing ultra-low-power sensor variants and optimizing code for minimal CPU wake time also contribute to energy savings.
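A quick estimate shows why duty cycling matters. The helper below computes the average current of a design that is active for a fraction of the time and asleep otherwise, then divides battery capacity by that current. The example figures (20 mA awake, 0.1 mA asleep, awake 5% of the time, a 1000 mAh battery) are hypothetical. The estimate ignores regulator losses and battery self-discharge, so treat it as an upper bound.

```cpp
// Rough battery-life estimate for a duty-cycled design (illustrative only).
// Ignores regulator losses, self-discharge and capacity derating, so real
// runtime will be shorter.
float averageCurrentMa(float activeMa, float sleepMa, float dutyCycle) {
  // dutyCycle is the fraction of time spent awake, from 0.0 to 1.0.
  return dutyCycle * activeMa + (1.0f - dutyCycle) * sleepMa;
}

float batteryLifeHours(float capacityMah, float averageMa) {
  return capacityMah / averageMa;
}
```

With those numbers the average draw is 0.05 × 20 + 0.95 × 0.1 ≈ 1.1 mA, which gives roughly 900 hours of runtime. The same toy would last 1000 / 20 = 50 hours if it never slept.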

Designers are increasingly adopting wireless charging coils embedded within toy cases, providing seamless rechargeability without dismantling. These approaches not only prolong playtime but also enhance user experience by reducing maintenance hassles.

For further reading on power optimization in embedded systems, refer to the seminal research article “Energy-Efficient Design Techniques for Embedded Systems” published in IEEE Transactions on Very Large Scale Integration (VLSI) Systems (IEEE Xplore).

Embracing these advanced design philosophies paves the way for interactive toys that are not only intelligent and engaging but also sustainable and user-friendly. Ready to push your DIY projects even further? Join our community forum to exchange ideas, troubleshoot challenges, and showcase your latest Arduino-powered innovations!

Harnessing Edge AI: Elevating Arduino Toys with On-Device Intelligence

As Arduino-powered interactive toys evolve, edge artificial intelligence (AI) lets them move beyond preprogrammed responses toward real-time learning and adaptation. Edge AI processes complex sensor data locally, without relying on cloud connectivity, which gives lower latency and better privacy. This is especially valuable in toys designed for personalized learning, where adapting to a child’s unique interaction patterns is crucial.

What Are the Challenges and Solutions When Deploying Edge AI Models on Memory-Constrained Arduino Boards?

Deploying AI models on Arduino microcontrollers demands careful optimization because RAM, flash memory and processing power are limited. Model compression techniques such as quantization, pruning and knowledge distillation reduce model size and computational requirements while largely preserving accuracy. Choosing lightweight architectures also makes deployment much more feasible. Examples include small convolutional neural networks designed for TinyML and decision trees tailored for microcontrollers.

Frameworks like TensorFlow Lite for Microcontrollers offer comprehensive tools to convert and deploy models efficiently, while development platforms such as Arduino Nano 33 BLE Sense provide enhanced sensor arrays and increased memory to facilitate AI integration. Managing inference scheduling and employing efficient data preprocessing pipelines are vital to balancing performance and power consumption.

For in-depth technical guidance, the TinyML community resources provide expert tutorials, benchmark datasets, and best practices for embedded machine learning on constrained devices.

Designing Immersive Feedback Loops: Marrying Multi-Sensor Fusion with Haptic and Visual Responses

Crafting truly immersive interactive toys involves blending sophisticated sensor fusion with nuanced feedback mechanisms. Combining ultrasonic, inertial, and environmental sensors enables context-aware decision-making, which can be complemented by haptic actuators, RGB LEDs, and sound modules to create multisensory engagement. This synergy fosters emotional connection and deeper cognitive stimulation.

For example, a DIY robotic pet equipped with combined sensor fusion algorithms can detect proximity, motion, and ambient conditions, then respond with gentle vibrations mimicking heartbeat rhythms or color changes indicating mood states. Such designs encourage empathy and enhance user experience beyond mere novelty.
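The heartbeat effect can be produced without blocking the main loop by deriving the motor state from the current time. In this hypothetical sketch, each beat period starts with a 100 ms pulse followed by a shorter 80 ms pulse. The beat rate is a parameter, so a calm pet might use 60 beats per minute and an excited one 120. All timings are assumptions to adjust by feel.

```cpp
#include <cstdint>

// Returns whether the vibration motor should be on at time nowMs, producing a
// "lub-dub" heartbeat: two short pulses at the start of every beat period.
// The pulse timings are illustrative assumptions.
bool heartbeatMotorOn(std::uint32_t nowMs, std::uint32_t beatsPerMinute) {
  if (beatsPerMinute == 0) return false;
  const std::uint32_t periodMs = 60000UL / beatsPerMinute;
  const std::uint32_t t = nowMs % periodMs;  // position within the current beat
  const bool lub = t < 100;                  // first pulse: 0-99 ms
  const bool dub = t >= 200 && t < 280;      // second, shorter pulse: 200-279 ms
  return lub || dub;
}
```

On the board, this would be called on every pass through the loop, for example `digitalWrite(motorPin, heartbeatMotorOn(millis(), bpm) ? HIGH : LOW);`. The rest of the loop stays free to keep reading sensors.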

How Can Developers Optimize Real-Time Feedback Synchronization in Complex Arduino-Based Toys?

Achieving seamless synchronization requires prioritizing sensor input processing through interrupt-driven architectures and, when feasible, a real-time operating system (RTOS). Efficient task scheduling and buffering strategies reduce latency and jitter in feedback delivery. Where the hardware supports it, peripheral DMA (Direct Memory Access) can also offload data transfers and minimize CPU overhead.

Optimizing code paths with state machines and event-driven programming ensures responsive and power-conscious operation. Developers should also consider modular hardware design to isolate feedback components, facilitating easier debugging and iterative refinement.
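A state machine keeps a toy’s behavior logic in one place. The sketch below is a hypothetical robotic pet with three states and four events, and its names and transitions are illustrative. Sensor code only needs to turn readings into events. The loop then feeds each event through `nextState()`, which keeps the behavior easy to test and extend without nested `delay()` calls.

```cpp
// Hypothetical states and events for an event-driven robotic pet.
enum class PetState { Sleeping, Alert, Playing };
enum class PetEvent { PersonNear, Touched, PersonLeft, IdleTimeout };

// All behavior changes go through this one small transition function.
PetState nextState(PetState current, PetEvent event) {
  switch (current) {
    case PetState::Sleeping:
      if (event == PetEvent::PersonNear || event == PetEvent::Touched) {
        return PetState::Alert;
      }
      break;
    case PetState::Alert:
      if (event == PetEvent::Touched) return PetState::Playing;
      if (event == PetEvent::PersonLeft || event == PetEvent::IdleTimeout) {
        return PetState::Sleeping;
      }
      break;
    case PetState::Playing:
      if (event == PetEvent::PersonLeft) return PetState::Alert;
      if (event == PetEvent::IdleTimeout) return PetState::Sleeping;
      break;
  }
  return current;  // events with no defined transition leave the state unchanged
}
```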

Eco-Conscious Electronics: Integrating Sustainable Materials and Energy Harvesting into Arduino Toys

Sustainability is increasingly vital in DIY electronics. Beyond employing non-toxic paints and natural fibers as highlighted previously, integrating energy harvesting technologies like miniature solar cells or triboelectric generators can reduce reliance on disposable batteries. Combining these with supercapacitors or rechargeable lithium-ion batteries extends operational life and promotes eco-friendly play.

Furthermore, sourcing biodegradable or recycled plastics for enclosures and designing for easy disassembly supports circular economy principles. Engaging children with environmentally mindful projects not only sparks creativity but also cultivates responsible innovation.

Explore more about eco-conscious crafting in the DIY natural earth paints and eco-friendly pigments guide for inspiration on integrating sustainability into your projects.

What Innovative Power Management Techniques Are Emerging for Sustainable Arduino Toy Designs?

Advanced power management now encompasses adaptive power scaling, where microcontrollers dynamically adjust clock speeds and voltage based on workload. Energy harvesting circuits are becoming more efficient with maximum power point tracking (MPPT) to optimize solar input. Additionally, ultra-low-power wireless protocols like Bluetooth Low Energy (BLE) 5.2 enable connectivity while conserving battery.
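Perturb and observe is one widely used MPPT method; the text does not say which algorithm a given harvester uses. The hypothetical sketch below shows its core loop. It nudges the operating voltage, measures the power, and reverses direction whenever the power drops. The step size is an assumption. On real hardware, the setpoint would be applied through a DC-DC converter’s duty cycle, with power computed from measured voltage and current.

```cpp
// One perturb-and-observe MPPT tracker (illustrative sketch).
struct PerturbObserve {
  float stepV = 0.05f;       // hypothetical perturbation size, in volts
  float lastPowerW = 0.0f;   // power measured at the previous setpoint
  float direction = 1.0f;    // +1: increasing voltage, -1: decreasing

  // Given the power measured at the current operating voltage,
  // return the next voltage setpoint.
  float nextVoltage(float currentV, float measuredPowerW) {
    if (measuredPowerW < lastPowerW) {
      direction = -direction;  // power dropped: reverse the perturbation
    }
    lastPowerW = measuredPowerW;
    return currentV + direction * stepV;
  }
};
```

Because it keeps probing, perturb and observe settles into a small oscillation around the maximum power point instead of stopping exactly on it. A smaller step reduces that ripple but tracks changing light more slowly.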

Researchers are also exploring bioenergy harvesting and flexible energy storage to create self-sustaining toys. Staying current with these trends ensures your DIY creations remain cutting-edge and environmentally responsible.

For technical deep dives into sustainable embedded system design, the Journal of Sustainable Electronics and Energy Systems offers peer-reviewed articles and case studies accessible via IEEE Xplore.

Join the Maker Movement: Share Your Advanced Arduino Toy Innovations and Insights

Are you pioneering new frontiers in Arduino-powered interactive toys? Whether experimenting with edge AI, multi-sensor fusion, or eco-conscious design, your experiences enrich the vibrant DIY community. We invite you to share your projects, challenges, and breakthroughs—engage fellow makers and spark collaborative innovation.

Discover more advanced project ideas and join the conversation at Create Interactive Arduino Toys: Integrate Sensors for Learning, and let’s push the boundaries of playful technology together!

Expert Insights & Advanced Considerations

Harnessing Multi-Sensor Fusion for Contextual Intelligence

Integrating multiple sensor modalities—such as ultrasonic, inertial, and environmental sensors—transforms Arduino-powered toys from reactive gadgets into context-aware companions. By applying data fusion algorithms like Kalman filters, developers can reconcile noisy inputs into coherent situational awareness, enabling nuanced and lifelike responses that deepen user engagement and introduce embedded systems concepts effectively.

Optimizing Edge AI Deployment on Resource-Constrained Microcontrollers

Deploying machine learning models on Arduino boards demands strategic model compression techniques including quantization, pruning, and knowledge distillation to fit limited memory and processing capacity. Leveraging frameworks like TensorFlow Lite for Microcontrollers and selecting ultra-lightweight architectures empowers developers to embed adaptive intelligence, enabling toys to personalize interactions without cloud dependency.

Sustainable Design: Marrying Eco-Friendly Materials with Energy Harvesting

Embracing sustainability in interactive toy design involves using biodegradable or recycled materials alongside energy harvesting solutions such as miniature solar panels and triboelectric generators. This approach not only prolongs operational life but also educates users on environmental responsibility, aligning DIY innovation with circular economy principles through modular, repairable designs.

Real-Time Feedback Synchronization for Immersive Play

Achieving seamless interaction necessitates prioritizing interrupt-driven sensor processing and efficient task scheduling to minimize latency between sensor input and multimodal feedback—haptic, visual, and auditory. Employing state machines and event-driven architectures enhances responsiveness, while modular hardware facilitates iterative refinement and debugging in complex systems.

Power Management Strategies for Extended Toy Lifespan

Employing dynamic voltage scaling, duty cycling, and optimized sleep modes extends battery life significantly in Arduino toys. Incorporating hot-swappable battery compartments and voltage regulation circuits ensures safety and consistent performance. Emerging wireless charging technologies further enhance user convenience and sustainability, reducing downtime and maintenance.

Curated Expert Resources

Practical Embedded Sensor Fusion on Arduino – Simon D. Levy’s comprehensive guide on embedded.com details hands-on techniques and optimization strategies for fusing sensor data on constrained devices.

TensorFlow Lite for Microcontrollers – Offering tutorials and tools at tensorflow.org, this resource is invaluable for integrating ML on Arduino platforms effectively.

TinyML Community Resources – The TinyML website provides expert tutorials, datasets, and best practices focused on machine learning for microcontrollers.

Energy-Efficient Design Techniques for Embedded Systems – A seminal article available via IEEE Xplore exploring advanced power optimization methods applicable in Arduino toy design.

DIY Natural Earth Paints and Eco-Friendly Pigments Guide – Found at diykutak.com, this guide inspires sustainable aesthetics in crafting projects.

Final Expert Perspective

Designing interactive Arduino toys today is an intricate dance of technology, creativity, and responsibility. The advanced integration of multi-sensor fusion and edge AI empowers toys to transcend static play, offering adaptive, immersive experiences that captivate and educate. Coupling these innovations with sustainable materials and intelligent power management not only extends toy lifespan but also aligns DIY creativity with environmental stewardship. For those ready to elevate their projects, exploring these expert insights and resources will pave the way toward truly next-generation interactive toys. Engage further with the community and enrich your journey by visiting Create Interactive Arduino Toys: Integrate Sensors for Learning, and discover how thoughtful design can shape the future of play.

This post really sparks excitement about how Arduino has revolutionised DIY interactive toys. I’ve recently started working on a small project combining an ultrasonic sensor with a buzzer to create a ‘mood toy’ that reacts when someone waves a hand nearby. It’s incredible how even a basic sensor setup can instantly transform a simple object into something engaging and playful. What fascinates me is the blend of technology and creativity—the idea of pairing sensors with storytelling elements to craft unique experiences. I’m curious about how others approach the balance between technical complexity and accessibility, especially when introducing kids or beginners to these projects. Do you find it more fulfilling to start simple and gradually add layers of sensors and features, or is it better to dive right into multi-sensor fusion and advanced behaviour? I’d love to hear about strategies that keep the process fun and educational without becoming overwhelming. Also, has anyone experimented with combining traditional crafting methods with Arduino to personalise the aesthetics while keeping the interactivity smart and reliable? Sharing these experiences would definitely inspire the community to push their creative boundaries further.

Emily, I really resonate with your point about balancing complexity and accessibility when introducing Arduino projects to beginners. From my experience, especially working with kids, starting with simple sensor integrations—like a basic touch or light sensor—helps build confidence and understanding before layering in more complex concepts like multi-sensor fusion or adaptive behaviours. This gradual approach keeps things fun and prevents overwhelm. Regarding combining traditional crafting with Arduino, I’ve found that customising toy casings with sustainable materials like bamboo or recycled plastics not only personalises the project aesthetically but also encourages kids to think about eco-conscious design. Plus, hand-painted details can give the toy a unique character that electronics alone can’t achieve. I’m curious if others have tried similar blends of traditional art and tech? Also, has anyone experimented with modular sensor attachments that allow easy swapping and upgrades? It seems like an elegant way to expand functionality while keeping the base project manageable. Your project sounds like a fantastic example of how simple sensor setups can create engaging experiences—I’d love to hear about any lessons learned or creative challenges you faced along the way!

I really appreciate this post’s enthusiasm for Arduino as a gateway to turning toys into interactive, educational tools. Having dabbled with Arduino projects myself, I find the concept of sensors being the ‘senses’ of a toy particularly resonates—especially ultrasonic sensors for proximity detection. It’s fascinating how these simple components can breathe life into otherwise static objects.

Addressing the balance between complexity and accessibility, I’ve found it rewarding to start with straightforward sensor integration, such as a basic touch sensor, before gradually introducing multi-sensor fusion. This tiered approach prevents designers and young learners from feeling overwhelmed while still encouraging creativity.

Regarding the aesthetic side, I’ve combined natural fibers and environmentally friendly paints with Arduino circuits to create toys that not only perform smart interactions but also carry a pleasing tactile quality. It’s a great way to blend technology and traditional crafting, enhancing the toy’s appeal and promoting sustainability.

I’m curious if anyone has explored integrating adaptive machine learning models on these toys to personalise interactions? Also, how do you all manage power efficiency without compromising response time in interactive toys, especially when adding multiple sensors? It’d be great to hear practical tips or success stories in balancing these factors!

This post really highlights how Arduino and sensors are transforming DIY toys from static to truly interactive experiences. I recently worked on a project involving an ultrasonic sensor to create a playful obstacle-avoidance feature in a robot arm for kids. Even with basic components, the way it engages children and sparks curiosity is impressive. I believe one of the most exciting aspects is how accessible it is for hobbyists and even educators who want to make learning about tech fun and tangible.

What are everyone’s thoughts on scaling these projects for real-world applications? For instance, would integrating features like multi-sensor fusion and edge AI be feasible for small hobby projects, given the hardware constraints? I’ve also seen some innovative ideas around modular sensors that make upgrades and customization easier. Has anyone here experimented with plug-and-play sensor modules? It seems like a practical way to extend the lifespan and functionality of a DIY interactive toy. Would love to hear your experiences or tips on balancing complexity with usability in such creative projects!

This article really opens up exciting possibilities for creating more responsive and immersive toys using Arduino. I recently experimented with combining ultrasonic sensors and sound modules to develop a toy that reacts to environmental cues—making play more interactive and educational. It’s fascinating how even simple sensor integrations can elevate the overall experience, transforming static toys into engaging learning tools. In my projects, I’ve found that starting small with core sensors allows for a better understanding of how each component works individually before combining them for more complex behaviours. This approach also makes troubleshooting easier. I’d love to hear how others are managing power consumption when adding multiple sensors and feedback mechanisms—especially for battery-powered toys. What strategies have you all found most effective for balancing functionality with longevity? Also, with the rapid development of machine learning on microcontrollers, do you think lightweight models will soon become standard for smarter toys, or is it better to keep things simple for now? Looking forward to learning from everyone’s experiences here.